Spark Analysis on a Large File

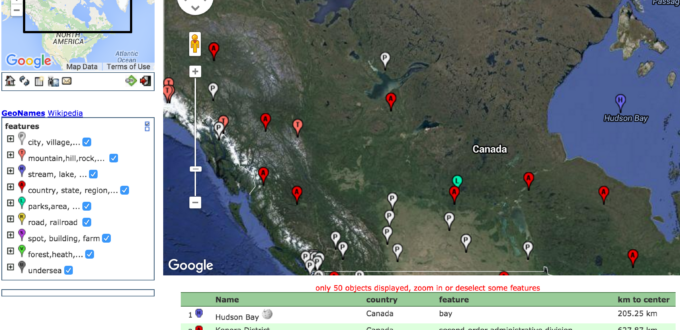

GeoNames.org has free gazetteer data by country or for the world, provided in tab-separated text files. In this post I show you how to do some simple analysis using DataFrames in Spark. The global file is 280M compressed and 1.2G uncompressed, a size that makes data analysis difficult until you have it in a database or similar analysis platform. Enter Spark…

The file I’m using is allCountries.txt but I suggest grabbing one of the smaller files just for your country of interest while testing. I’ve unzipped it manually before doing the analysis. As a homework exercise, the reader is left to figure out how to use one of the other binary readers to interact with the .zip file directly.
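For anyone attempting that homework, here is a minimal sketch of one possible approach, using only the JDK's zip classes. The helper name linesFromZip and the idea of selecting an entry by name are assumptions for illustration, not something from the GeoNames or Spark documentation; check which entries your archive actually contains.

```scala
import java.io.InputStream
import java.util.zip.ZipInputStream
import scala.io.Source

// Hypothetical helper: yields the lines of one named entry inside a zip stream.
// Assumes the entry is UTF-8 text; returns an empty iterator if it is not found.
def linesFromZip(in: InputStream, entryName: String): Iterator[String] = {
  val zis = new ZipInputStream(in)
  // Advance entry by entry until the requested file is found (or the archive ends)
  var entry = zis.getNextEntry
  while (entry != null && entry.getName != entryName) entry = zis.getNextEntry
  if (entry == null) Iterator.empty
  else Source.fromInputStream(zis, "UTF-8").getLines() // reads up to the end of this entry
}
```

In the shell this could probably be wired up as sc.binaryFiles("allCountries.zip").flatMap { case (_, s) => linesFromZip(s.open(), "allCountries.txt") }. A zip archive is not splittable, so the whole file would be read by a single task, and the sketch never closes the stream explicitly.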

Spark Shell Install & Startup

I’m running on a MacBook and installed Spark using the command:

brew install spark

As my allCountries file is quite large and I’m not using other machines to help with the analysis, I need to give the Spark shell lots of memory for processing. So to start the shell:

spark-shell --driver-memory 2G

Setting RDD Schema

There are many columns in the geonames dataset, but I’m only interested in the second one, the place name itself. As there is no schema information stored in the text file itself, we have to define it up front:

case class geoname(locname: String)

I do it by defining a class called “geoname” that has a single (string) column in it called “locname”.

Now when we tell it how to read the text file, we can focus on mapping only the second column into the schema we’ve defined:

val locnames = sc.textFile("allCountries.txt").map(_.split("\t")).map(p => geoname(p(1)))
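One caveat with p(1): a line with fewer than two tab-separated fields would throw an ArrayIndexOutOfBoundsException once the job actually runs. A minimal sketch of a more defensive extraction follows; the helper name placeName is hypothetical, and whether your copy of the file contains such short lines is something to check rather than assume.

```scala
// Hypothetical helper: returns the second tab-separated field, if the line has one.
def placeName(line: String): Option[String] = {
  // A limit of -1 keeps trailing empty fields instead of silently dropping them
  val fields = line.split("\t", -1)
  // lift(1) gives None instead of throwing when the line is too short;
  // the filter also drops names that are present but empty
  fields.lift(1).filter(_.nonEmpty)
}
```

It could then replace the two map calls with something like sc.textFile("allCountries.txt").flatMap(line => placeName(line).toList).map(geoname), which skips malformed lines instead of failing the job.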

Note that defining locnames likely did nothing on your computer and was instantaneous, because Spark won’t do anything with the actual data until it has to. It does not just load it all into memory and then wait. Instead it records the steps you describe and only executes them when you call an action that needs a real result, such as printing output or counting records. We can trigger this by asking for the first record to be printed or doing a record count:

locnames.first
OUTPUT: res1: geoname = geoname(Pic de Font Blanca)

locnames.count
OUTPUT: res4: Long = 10131643
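The lazy behaviour described above can be observed on a tiny dataset. This is a sketch for the Spark shell, assuming Spark 2.0 or later (for sc.longAccumulator); the names nums, seen and doubled are made up for the illustration. Accumulator updates made inside a transformation can be applied more than once if a task is re-run, so treat the counter as a demonstration aid only.

```scala
// Run inside spark-shell, where sc is predefined (assumes Spark 2.0+)
val nums = sc.parallelize(1 to 5)
val seen = sc.longAccumulator("seen")

// A transformation: this returns immediately and processes nothing yet
val doubled = nums.map { n => seen.add(1); n * 2 }
println(s"before any action: ${seen.value}") // still 0, nothing has run

// An action: this is what actually triggers the map above
val total = doubled.count()
println(s"after count: ${seen.value} elements were processed")
```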

Querying a DataFrame in Spark

Lots can be done in the RDD version of the data but to do more analytics we want to work with DataFrames.

On Windows I had to run the following two lines before creating the DataFrame, otherwise I got “value toDF is not a member of org.apache.spark.rdd.RDD”.

On OSX I did not need them. It may be a version difference, but I didn’t check:

val sqlContext = new org.apache.spark.sql.SQLContext(sc)
import sqlContext.implicits._

As we already have a schema defined, it’s as simple as a single statement:

val locdf = locnames.toDF()
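On newer Spark versions the RDD and case class step can probably be skipped by reading the tab-separated file straight into a DataFrame. The sketch below assumes Spark 2.0 or later, where the shell predefines a SparkSession called spark; it is not what this post ran. To stay self-contained it writes a two-line sample file (made-up rows in the GeoNames layout) rather than reading allCountries.txt.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import spark.implicits._ // assumes spark-shell on Spark 2.0+

// Small stand-in for allCountries.txt so the example runs anywhere
val tmp = Files.createTempFile("geonames-sample", ".txt")
Files.write(tmp, "1\tLong Lake\tLong Lake\n2\tMud Lake\tMud Lake\n".getBytes(StandardCharsets.UTF_8))

val directDf = spark.read
  .option("sep", "\t")  // tab-separated instead of comma-separated
  .option("quote", "")  // empty string turns off quote handling, so names containing " stay intact
  .csv(tmp.toUri.toString)
  .select($"_c1".as("locname")) // columns without a header are named _c0, _c1, ...
```

For the real data, the path would be "allCountries.txt" instead of the temporary file.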

Now that we have a DataFrame we can use some more functions. In this case I want to count distinct usages of the place names and then print the top 20 most popular ones (sort descending on count).

locdf.groupBy("locname").count.sort($"count".desc).show

locname count

First Baptist Church 2631

San Antonio 2244

The Church of Jes... 2178

San José 1914

Church of Christ 1657

Santa Rosa 1619

Mill Creek 1531

Spring Creek 1506

La Esperanza 1464

San Francisco 1464

San Isidro 1407

San Juan 1367

First Presbyteria... 1338

Dry Creek 1260

San Miguel 1214

Krajan 1151

Mount Zion Church 1100

Stormyra 1097

San Pedro 1091

Bear Creek 1081
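The "..." in some names above comes from show, which by default prints 20 rows and truncates long strings. A sketch of how to see the full values follows, assuming Spark 2.0 or later with spark predefined in the shell. The sample rows are hypothetical stand-ins so the snippet runs without the big file.

```scala
import spark.implicits._ // assumes spark-shell on Spark 2.0+

// Hypothetical sample data; with the real data you would start from locdf instead
val sample = Seq(
  "A Deliberately Long Hypothetical Place Name",
  "A Deliberately Long Hypothetical Place Name",
  "Mud Lake"
).toDF("locname")

val top = sample.groupBy("locname").count().sort($"count".desc)

// First argument: number of rows to print; second: false disables string truncation
top.show(20, false)
```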

So there you have it – the most popular place names in the world! Odds are you have one of them near you 🙂

I ran the same analysis on just the Canadian place names and found it socially interesting that the results were all about physical geography and not about cultural locations.

locname count

Long Lake 199

Mud Lake 166

Long Pond 148

Lac Long 131

Green Island 121

Long Point 116

Big Island 116

The Narrows 110

Otter Lake 105

Round Lake 105

Lac Rond 103

Little Lake 99

Moose Lake 99

Black Rock 95

Long Island 94

Lac à la Truite 92

Gull Pond 92

Burnt Island 92

Twin Lakes 90

Island Lake 87

NOTE: For a similar but more in-depth Spark tutorial, check out this great one from MapR: “Using Apache Spark DataFrames for Processing of Tabular Data”.

Fixed portion: map(p => geoname(p(1)))

There was an annoying HTML escape in there. You definitely do not want “p =&gt;”.