The Zeppelin project provides a powerful web-based notebook platform for data analysis and discovery. Behind the scenes it supports Spark distributed contexts as well as other language bindings on top of Spark.

This post is a very simple introduction that shows the first few steps to get started. You’ll find further details on the Zeppelin site or in blogs and videos elsewhere.

The official install README is here, but I will add a few notes from my own experience getting it to work well.

Download and Build

- My environment is OSX 10.10. I had previously installed Maven (3.3.0) via Homebrew, and I have a Spark 1.3.1 install as well. I’m running in standalone mode, not with Spark on a cluster.

- Get the Zeppelin source package from GitHub (direct zip file link), unzip it, then open Terminal and change into that folder:

cd ~/Downloads/incubator-zeppelin-master

- Launch the Maven build, including the profile flag that specifies the Spark version I’m running (and skipping the tests to save time):

mvn clean install -Pspark-1.3 -DskipTests

- Get a coffee while Maven downloads tons of packages. Or two coffees, actually.

- If all goes well, launch Zeppelin:

bin/zeppelin-daemon.sh start

- Check out the Zeppelin web notebook at http://localhost:8080
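The daemon may take a little while to come up after the start command, so the page might not load on the first try. Here is a minimal Python sketch for checking it from a script, assuming Zeppelin listens on the default http://localhost:8080 used above; zeppelin_is_up and wait_for_zeppelin are hypothetical helper names, not part of Zeppelin:

```python
import http.client
import time
import urllib.error
import urllib.request


def zeppelin_is_up(url: str = "http://localhost:8080", timeout: float = 5.0) -> bool:
    """Return True if an HTTP server answers at `url` (hypothetical helper)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status, but it is running.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a non-HTTP reply: treat as "not up".
        return False


def wait_for_zeppelin(url: str = "http://localhost:8080",
                      attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll `url` until it answers or the attempts run out (hypothetical helper)."""
    for attempt in range(attempts):
        if zeppelin_is_up(url):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)  # give the server a moment before retrying
    return False
```

For example, call wait_for_zeppelin() right after bin/zeppelin-daemon.sh start; with the defaults it gives up after roughly a minute and returns False.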

Test Interpreters – Spark, Scala, Bash, Markdown

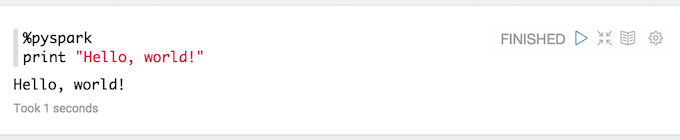

The notebook page has text boxes (Zeppelin calls them paragraphs) that you type commands into, each with a little Play button to its right that executes the commands. Zeppelin supports various languages out of the box, including Spark, Bash, Markdown and more.

Test the install by running a few of these commands in one of the paragraphs. An optional first header line (a la “shebang” style), such as %sh, specifies what language you are writing in. Without a header, Scala appears to be the default.
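To make the header idea concrete, here is a small Python sketch of the rule as described above: if the first line starts with %, the word after it names the interpreter; otherwise the paragraph goes to the default Spark (Scala) interpreter. This is an illustration under those assumptions, not Zeppelin’s actual parsing code, and split_paragraph is a hypothetical name:

```python
from typing import Tuple


def split_paragraph(text: str, default: str = "spark") -> Tuple[str, str]:
    """Split paragraph text into (interpreter name, body). Illustrative only."""
    stripped = text.lstrip()
    if not stripped.startswith("%"):
        # No header line: the whole paragraph goes to the default interpreter.
        return default, text
    header, _, body = stripped.partition("\n")
    name, _, same_line = header[1:].partition(" ")
    if same_line.strip():
        # Text after the name on the header line is treated as part of the body.
        body = same_line.strip() + ("\n" + body if body else "")
    return name.strip(), body


print(split_paragraph("%sh\nls -la"))             # ('sh', 'ls -la')
print(split_paragraph('println("Hello World")'))  # ('spark', 'println("Hello World")')
```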

Spark Interpreter Test

To confirm that a Spark context is being created properly, just type sc into a paragraph and run it; it should echo back a SparkContext object. Note that no header line is required.

Scala Interpreter Test

A Hello World test in Scala is a simple check using the println() function, for example println("Hello World"). Again, no header line is required.

BASH Interpreter Test

Yes, it’s cool: you can call out to Bash really easily. Just put the %sh header on the first line of a paragraph and a shell command below it.
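Conceptually, running a %sh paragraph means handing its text to a shell and showing whatever comes back. A rough Python approximation, assuming the command is run with bash -c (falling back to sh) and that stdout and stderr are shown together; run_like_sh_paragraph is a hypothetical name, not Zeppelin’s API:

```python
import shutil
import subprocess


def run_like_sh_paragraph(command: str, timeout: float = 60.0) -> str:
    """Run `command` in a shell and return its output (hypothetical helper)."""
    shell = shutil.which("bash") or shutil.which("sh")  # prefer bash, fall back to sh
    if shell is None:
        raise RuntimeError("no shell found on PATH")
    result = subprocess.run(
        [shell, "-c", command],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # interleave errors with normal output
        text=True,
        timeout=timeout,
    )
    return result.stdout


print(run_like_sh_paragraph("echo Hello from the shell"))
```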

Markdown Interpreter Test

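Don’t ask me why they support Markdown out of the box, but I’m guessing it’s for annotating your notebook with comments and notes. Start a paragraph with the %md header line and write Markdown below it; running the paragraph renders it as formatted text.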

Setup Python Interpreter

Python won’t necessarily run right away. I had to do a couple of things first.

- Install the py4j module in the system Python:

sudo pip install py4j

- Explicitly set the spark.home property:

- Click the Interpreter button on the notebook web page and a form will appear.

- Select Edit in the top right corner and enter the path to your Spark folder in the spark.home box. It’s blank by default. (My Spark environment was merely their download zip file extracted to a folder.)

- Hit Save below the form and it will restart the interpreter for you.
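Both of those fixes can fail quietly, so here is a small Python sketch that checks them before you try a Python paragraph. It assumes spark.home should point at an extracted Spark download, which ships a python/pyspark folder; pyspark_setup_problems is a hypothetical helper, and it only inspects the Python it runs under:

```python
import importlib.util
import os
from typing import List


def pyspark_setup_problems(spark_home: str) -> List[str]:
    """Return human-readable setup problems; an empty list means both checks passed."""
    problems = []
    # Step 1: py4j must be importable from this Python.
    if importlib.util.find_spec("py4j") is None:
        problems.append("py4j is not installed (try: sudo pip install py4j)")
    # Step 2: spark.home must point at an extracted Spark folder.
    if not spark_home:
        problems.append("spark.home is blank")
    elif not os.path.isdir(spark_home):
        problems.append("spark.home does not exist: " + spark_home)
    elif not os.path.isdir(os.path.join(spark_home, "python", "pyspark")):
        problems.append("no python/pyspark folder under spark.home: " + spark_home)
    return problems
```

Pass it the same path you typed into the spark.home box and fix anything it reports before restarting the interpreter.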

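Now a simple Python test should work: start a paragraph with the %pyspark header (the Python-on-Spark interpreter) and run some Python code below it.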

Next Steps

In the next post, follow along as I learn how to use Spark and Zeppelin through the built-in tutorial. Thanks for reading; I always look forward to comments here or on Twitter.
